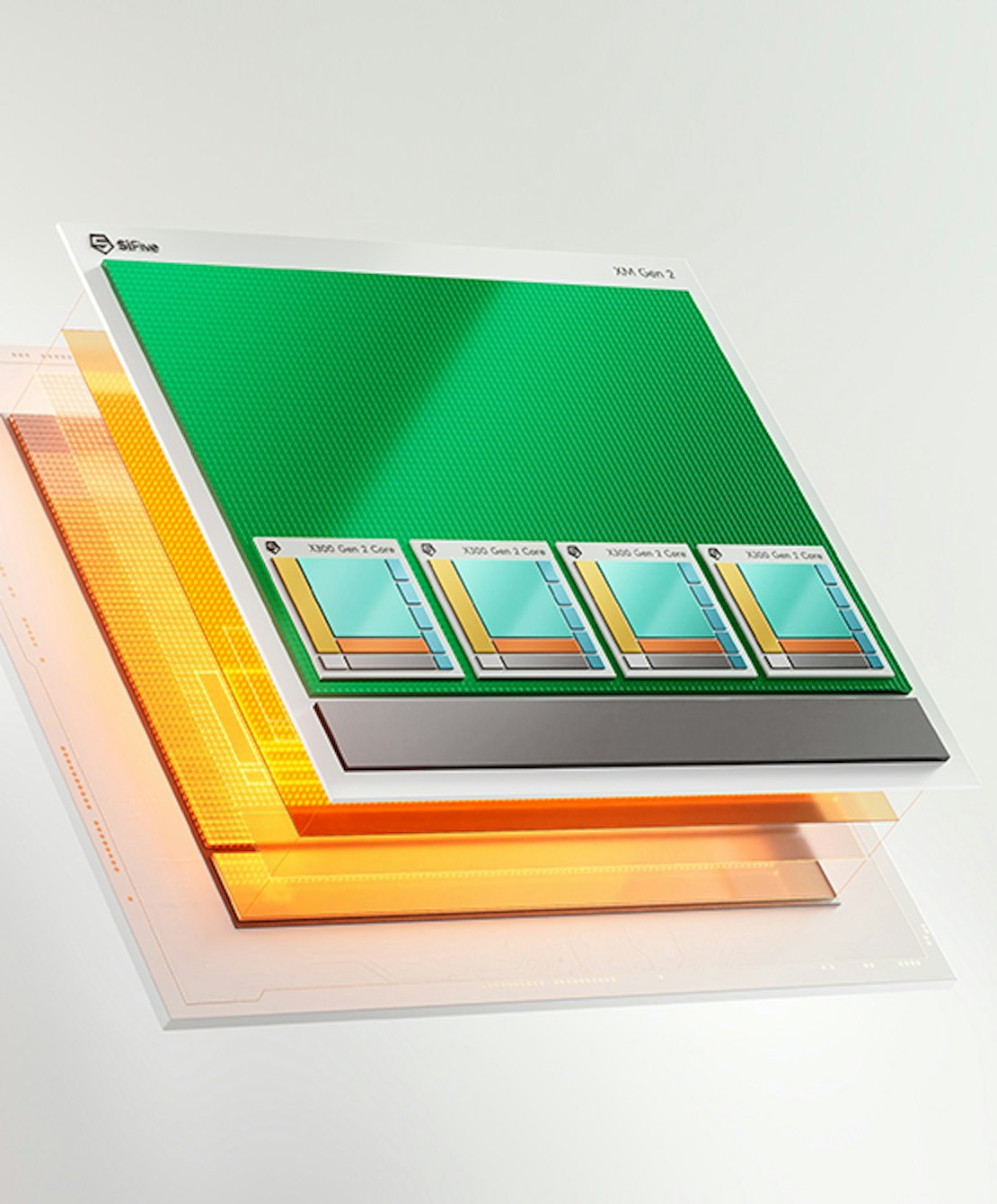

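SiFive's XM Series Gen 2 delivers a highly scalable and efficient AI compute engine for a wide range of end-market applications. By integrating scalar, vector, and matrix engines, the XM Series lets users make full use of highly efficient memory bandwidth. It continues SiFive's tradition of very high performance per watt across compute-intensive workloads and is deeply optimized for large language models (LLMs). To speed up development, SiFive will also open-source the SiFive Kernel Library.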

SiFive Intelligence

XM Series Gen 2

XM Series Gen 2 Key Features

What's New in Gen 2

- Highly scalable, covering a wide range of end-market applications

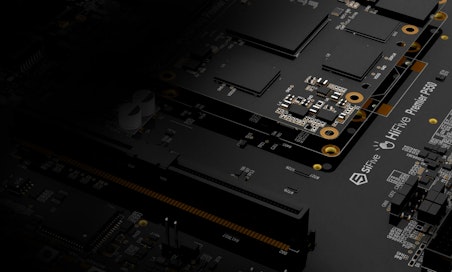

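- Integrates 4 X300 Gen 2 cores, with 1 to 4 of them acting as the accelerator control unit for the XM matrix engine

- Upgraded, all-new XM Gen 2 matrix engine

- Higher performance across a variety of workloads

- Support for additional data types

- Deeply optimized for large language models (LLMs)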

SiFive Matrix Engine

- Wide outer-product design

- Tightly integrated with the 4 X cores

- Deeply integrated with the vector units

4 X cores per cluster

- Dual vector units per core

- Run all other layers, such as activation functions

- New exponential acceleration instructions

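New matrix instructions

- Fetched by the scalar unit

- Source data comes from the vector registers

- Destinations are the individual matrix accumulators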
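The outer-product design and the register flow listed above can be sketched in plain Python. This is only an illustrative model: the function name `matmul_outer_product`, the list-of-lists layout, and the tile shapes are assumptions made for this example, not SiFive's instruction semantics or the SiFive Kernel Library API.

```python
def matmul_outer_product(a, b):
    """Compute C = A x B as a sum of rank-1 (outer-product) updates.

    Hypothetical model: each step reads one column of A and one row of B
    (standing in for two vector registers) and adds their outer product
    into `acc` (standing in for a matrix accumulator).
    """
    m, k = len(a), len(a[0])
    if len(b) != k:
        raise ValueError("inner dimensions of A and B must match")
    n = len(b[0])

    acc = [[0] * n for _ in range(m)]  # accumulator tile, starts at zero
    for step in range(k):
        col = [a[i][step] for i in range(m)]  # column `step` of A
        row = b[step]                          # row `step` of B
        for i in range(m):
            for j in range(n):
                acc[i][j] += col[i] * row[j]   # rank-1 update
    return acc


if __name__ == "__main__":
    print(matmul_outer_product([[1, 2], [3, 4]], [[5, 6], [7, 8]]))
```

Each step touches only m + n input values but performs m × n multiply-accumulates, which is the usual argument for why an outer-product formulation makes good use of register and memory bandwidth.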

1 cluster = 16 TOPS (INT8) or 8 TFLOPS (BF16) per GHz
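As a rough worked example of the per-GHz figures above, the following sketch scales them by clock frequency and cluster count. The function name `peak_throughput` is hypothetical, and linear scaling across multiple clusters is an assumption of this sketch, not a figure from the source.

```python
# Per-cluster peak figures quoted in the text, per GHz of clock frequency.
INT8_TOPS_PER_GHZ = 16.0
BF16_TFLOPS_PER_GHZ = 8.0


def peak_throughput(clock_ghz, clusters=1):
    """Return theoretical peak throughput for a given clock and cluster count.

    Assumes (hypothetically) that peak throughput scales linearly with both
    clock frequency and the number of clusters.
    """
    if clock_ghz <= 0 or clusters < 1:
        raise ValueError("clock_ghz must be positive and clusters >= 1")
    scale = clock_ghz * clusters
    return {
        "int8_tops": INT8_TOPS_PER_GHZ * scale,
        "bf16_tflops": BF16_TFLOPS_PER_GHZ * scale,
    }


if __name__ == "__main__":
    print(peak_throughput(1.0))     # one cluster at 1 GHz
    print(peak_throughput(2.0, 4))  # four clusters at 2 GHz
```

These are peak numbers only; sustained throughput depends on the workload and on memory bandwidth.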

1 TB/s of sustained bandwidth per XM Series cluster
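Combining the bandwidth figure with the per-GHz INT8 peak gives a simple roofline-style estimate of when a kernel is limited by memory rather than compute. This is a sketch under stated assumptions: a 1 GHz clock (so 16 TOPS peak), the 1 TB/s figure applying at that clock, and the hypothetical helper names `ridge_point` and `attainable_tops`.

```python
def ridge_point(peak_tops=16.0, bandwidth_tb_s=1.0):
    """Arithmetic intensity (ops per byte) at which compute and memory balance."""
    return peak_tops / bandwidth_tb_s


def attainable_tops(intensity_ops_per_byte, peak_tops=16.0, bandwidth_tb_s=1.0):
    """Roofline model: throughput is capped by compute or by memory traffic.

    ops/byte multiplied by TB/s gives tera-ops per second, so the units match.
    Defaults assume one cluster at a hypothetical 1 GHz clock.
    """
    if intensity_ops_per_byte < 0:
        raise ValueError("arithmetic intensity must be non-negative")
    return min(peak_tops, intensity_ops_per_byte * bandwidth_tb_s)


if __name__ == "__main__":
    print(ridge_point())          # balance point in ops per byte
    print(attainable_tops(2.0))   # low intensity: memory-bound
    print(attainable_tops(64.0))  # high intensity: compute-bound
```

Under these assumptions, kernels below roughly 16 INT8 operations per byte moved are bandwidth-bound, which is typical of the matrix-vector work in LLM token generation.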

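An XM cluster connects to memory in two ways:

- A shared memory port for cached accesses, which keeps the 4 internal X cores coherent

- A dedicated high-bandwidth port per X core for uncached accesses, which guarantees that full bandwidth is available

The host CPU can be RISC-V, x86, or Arm (or no host is needed)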

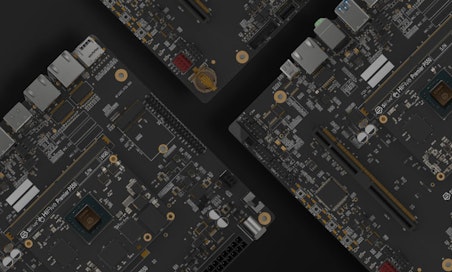

SiFive Intelligence Family

X100 Series

Intelligence

- 32-bit or 64-bit CPU

- 128-bit vector length

- SSCI and VCIX

X200 Series

Intelligence

- 512-bit vector length

- Single vector ALU

- SSCI and VCIX (1024-bit)

X300 Series

Intelligence

- 1024-bit vector length

- Single or dual vector ALU

- SSCI and VCIX (2048-bit)
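To make the vector lengths above concrete, the sketch below computes how many elements fit in one vector register group using the RISC-V Vector extension relation VLMAX = LMUL × VLEN / SEW. The function name `elements_per_register` is hypothetical, and only integer LMUL values are handled here.

```python
def elements_per_register(vlen_bits, sew_bits, lmul=1):
    """Return VLMAX = LMUL * VLEN / SEW for integer LMUL.

    vlen_bits: vector register length in bits (e.g. 128, 512, 1024)
    sew_bits:  selected element width in bits (8, 16, 32, or 64)
    lmul:      register grouping factor (1, 2, 4, or 8 in this sketch)
    """
    if sew_bits not in (8, 16, 32, 64):
        raise ValueError("SEW must be 8, 16, 32, or 64 bits")
    if lmul not in (1, 2, 4, 8):
        raise ValueError("this sketch supports integer LMUL of 1, 2, 4, or 8")
    if vlen_bits % sew_bits != 0:
        raise ValueError("VLEN must be a multiple of SEW")
    return vlen_bits // sew_bits * lmul


if __name__ == "__main__":
    # Vector lengths listed for the X100, X200, and X300 series.
    for vlen in (128, 512, 1024):
        print(vlen, elements_per_register(vlen, 8), elements_per_register(vlen, 16))
```

For instance, a 1024-bit register holds 128 INT8 elements or 64 16-bit elements at LMUL = 1.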

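Core IP Families

Automotive Family

The SiFive Automotive™ family offers a range of high-end application and real-time processors with industry-leading performance, minimal die area, and low power consumption, certified to ISO 21434 and ISO 26262 ASIL B and ASIL D, with split-lock capability.

Essential Family

The SiFive Essential™ family offers a range of silicon-proven, preconfigured embedded CPU cores, and also lets you configure processors on demand with the SiFive Core Designer tool to meet specific market and application requirements.

Performance Family

SiFive Performance™ family RISC-V processors are designed for maximum throughput and performance efficiency across a wide range of applications, from data center workloads (such as web servers, multimedia processing, and networking/storage) to consumer applications that need AI capabilities (such as TVs, wearables, and other smart devices).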