SiFive Blog

The latest insights and in-depth technical analysis from RISC-V experts

The Revolution Evolution Continues - SiFive RISC-V Technology Symposium - Part II

During the afternoon session of the Symposium, Jack Kang, SiFive VP of Sales, addressed RISC-V Core IP for vertical markets ranging from consumer/smart home/wearables to storage/networking/5G and ML/edge. Intelligence can be embedded from the edge to the cloud with U Cores (64-bit application processors), S Cores (64-bit embedded processors), and E Cores (32-bit embedded processors). Embedded intelligence allows mixing application cores with embedded cores, extensible custom instructions, configurable memory for application tuning, and other heterogeneous combinations of real-time and application processors. Recently announced products include Huami in wearable AI, the Fadu SSD controller in the enterprise, and Microsemi/Microchip's upcoming FPGA architecture. Customization comes in two forms: configuration changes to the cores, and custom instructions placed in a reserved encoding space on top of the base instruction set and standard extensions. Because that space is reserved, custom instructions are guaranteed not to collide with existing or future standard extensions, which preserves software compatibility.
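In the RISC-V base opcode map, that reserved space includes the major opcodes custom-0 (0b0001011) and custom-1 (0b0101011), which standard extensions avoid. The sketch below is a minimal illustration, assuming a standard R-type field layout. It packs a hypothetical custom instruction word into custom-0; the helper names and the field values are illustrative only and do not describe any SiFive-defined instruction.

```python
# Major opcodes that the RISC-V base opcode map reserves for custom extensions;
# standard extensions avoid these, so custom instructions here cannot collide.
CUSTOM_0 = 0b0001011  # 0x0B
CUSTOM_1 = 0b0101011  # 0x2B


def encode_r_type(opcode: int, rd: int, funct3: int, rs1: int, rs2: int, funct7: int) -> int:
    """Pack the six R-type fields into one 32-bit instruction word (illustrative helper)."""
    # (value, bit width) for each field, range-checked before packing.
    fields = {"opcode": (opcode, 7), "rd": (rd, 5), "funct3": (funct3, 3),
              "rs1": (rs1, 5), "rs2": (rs2, 5), "funct7": (funct7, 7)}
    for name, (value, width) in fields.items():
        if not 0 <= value < (1 << width):
            raise ValueError(f"{name}={value} does not fit in {width} bits")
    # R-type layout: funct7[31:25] rs2[24:20] rs1[19:15] funct3[14:12] rd[11:7] opcode[6:0]
    return ((funct7 << 25) | (rs2 << 20) | (rs1 << 15)
            | (funct3 << 12) | (rd << 7) | opcode)


def major_opcode(word: int) -> int:
    """Return the 7-bit major opcode (bits [6:0]) of a 32-bit instruction."""
    return word & 0x7F


# Hypothetical custom instruction: rd = x10, rs1 = x11, rs2 = x12, funct3 = funct7 = 0.
word = encode_r_type(CUSTOM_0, rd=10, funct3=0, rs1=11, rs2=12, funct7=0)
print(f"0x{word:08x}")  # → 0x00c5850b
```

In a real toolchain, the GNU assembler's `.insn` directive can emit encodings like this without modifying the assembler itself.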

Palmer Dabbelt, SiFive software lead manager, contrasted the complexity of x86 instructions with the simplicity of RISC-V. The current state of the RISC-V specifications includes:

- User-mode ISA spec: the M extension for integer multiplication and division, A for atomics, F and D for single- and double-precision floating point, and C for compressed 16-bit instructions

- Privileged-mode ISA spec: Machine, Supervisor, and Hypervisor modes

- External Debug spec: debugging machine-mode software over JTAG
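These extension letters combine into ISA naming strings such as RV64IMAFDC, commonly abbreviated RV64GC. The sketch below is minimal and assumes a simplified subset. It covers only the base I plus the single-letter extensions listed above, and it treats G as IMAFD, ignoring the Zicsr/Zifencei components that newer specifications fold into G. It also shows how the C extension is recognizable from the low two bits of an instruction parcel. The function names are hypothetical helpers.

```python
import re
from typing import List, Tuple

# Single-letter extensions named in the list above, plus the base integer ISA "I".
EXTENSIONS = {
    "I": "base integer",
    "M": "integer multiplication and division",
    "A": "atomics",
    "F": "single-precision floating point",
    "D": "double-precision floating point",
    "C": "compressed 16-bit instructions",
}


def parse_isa(isa: str) -> Tuple[int, List[str]]:
    """Expand a string such as 'rv64gc' into (XLEN, [extension descriptions])."""
    match = re.fullmatch(r"RV(32|64|128)([A-Z]+)", isa.upper())
    if match is None:
        raise ValueError(f"unrecognized ISA string: {isa!r}")
    xlen = int(match.group(1))
    # Simplification: G is treated as IMAFD; newer specs also include Zicsr/Zifencei.
    letters = match.group(2).replace("G", "IMAFD")
    unknown = [ch for ch in letters if ch not in EXTENSIONS]
    if unknown:
        raise ValueError(f"extensions not covered by this sketch: {unknown}")
    return xlen, [EXTENSIONS[ch] for ch in letters]


def is_compressed(parcel: int) -> bool:
    """True if a 16-bit instruction parcel is a C-extension (16-bit) instruction.

    Instructions of 32 bits or longer have bits [1:0] == 0b11; any other
    value in the low two bits marks a 16-bit compressed instruction.
    """
    return (parcel & 0b11) != 0b11


print(parse_isa("rv64gc"))
# c.nop (0x0001) vs. the low parcel of the 32-bit addi x0, x0, 0 (0x00000013)
print(is_compressed(0x0001), is_compressed(0x0013))  # → True False
```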

Some software resources are listed in footnote [1] below.

Mohit Gupta, SiFive VP of SoC IP, covered SoC IP solutions for vertical markets. For cost and design-cycle-time reasons, SoC/ASIC design is increasingly becoming an IP integration task. No single memory technology suits every design: each custom requirement calls for its own power, bandwidth, and latency tradeoffs. Likewise, SerDes interfaces vary by application, and their selection is driven by power and area optimization. DesignShare partners provide their differentiated IP, built on top of RISC-V cores and foundational IP, at no up-front cost for verification and integration, which lowers development cost and reduces the expertise required.

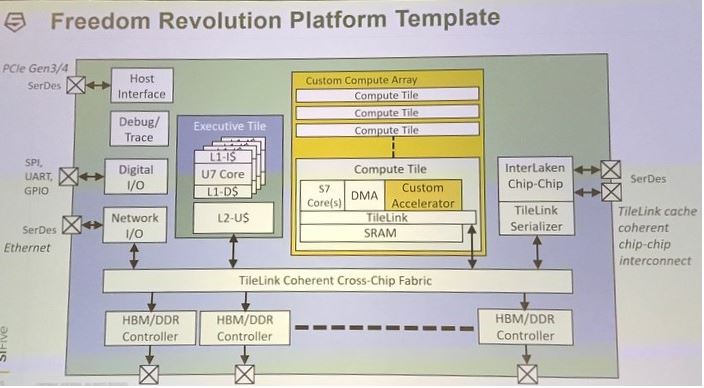

Krste Asanovic, SiFive Co-Founder and Chief Architect, outlined the Customizable RISC-V AI SoC Platform.

The key AI accelerator design metrics for 'inference at the edge' are cost, performance, and power; for 'inference in the cloud', latency and throughput/cost matter most. For 'training in the cloud', the only metric that matters is performance.

To lower cost, power, and service latency, conventional Unix servers can be eliminated and replaced with self-hosting accelerator engines running alongside SiFive RISC-V Unix-capable multicore processors.

SiFive's Freedom Revolution consists of:

- High-bandwidth AI and networking applications in TSMC 16nm/7nm

- SiFive 7-series RISC-V processors with vector units

- Accelerator bays for custom accelerators

- Cache-coherent TileLink interconnect

- 4-3.2 Gb/s HBM2 memory interface

- 28/56/112 Gb/s SerDes links

- Interlaken chip-to-chip protocol

- High-speed 40+ Gb/s Ethernet

In development: E7/S7/U7 vector and custom extensions, accelerator bays, 3.2 Gb/s HBM2 in 7nm, and higher-performance RISC-V processors.
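The worked arithmetic below puts the 3.2 Gb/s HBM2 figure in perspective. An HBM2 stack exposes a 1024-bit data interface (8 channels of 128 bits), so its peak theoretical bandwidth is roughly the bus width times the per-pin rate, divided by 8 bits per byte. This is a minimal sketch that counts only raw data pins and ignores refresh, protocol overhead, and achievable efficiency. The helper name is hypothetical.

```python
HBM2_BUS_WIDTH_BITS = 1024  # data-bus width of one HBM2 stack (8 channels x 128 bits)


def hbm2_peak_gbytes_per_s(pin_rate_gbps: float, stacks: int = 1) -> float:
    """Peak theoretical bandwidth in GB/s: bus width x per-pin rate / 8 bits per byte."""
    return HBM2_BUS_WIDTH_BITS * pin_rate_gbps * stacks / 8


# 3.2 Gb/s per pin is the HBM2 rate quoted above for 7nm.
bw = hbm2_peak_gbytes_per_s(3.2)
print(f"{bw:.1f} GB/s per stack")  # → 409.6 GB/s per stack
```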

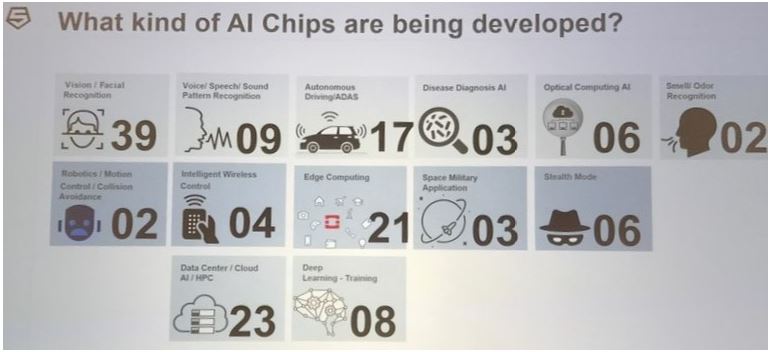

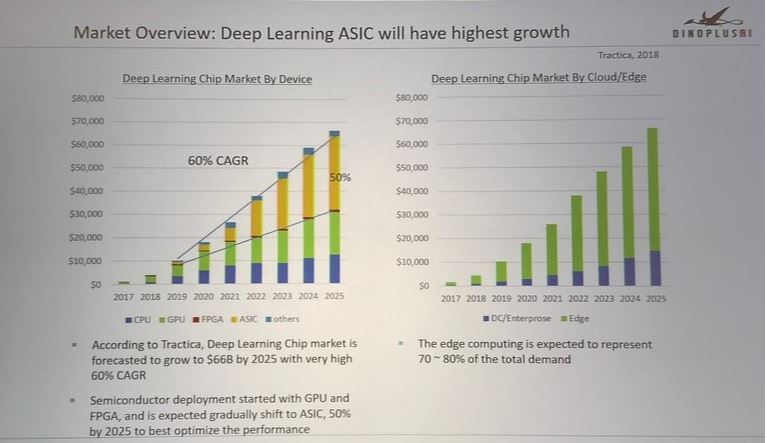

Jay Hu, CEO of DinoplusAI, rounded out the presentation portion of the Symposium. He provided a market overview showing deep learning ASICs as the segment with the highest growth.

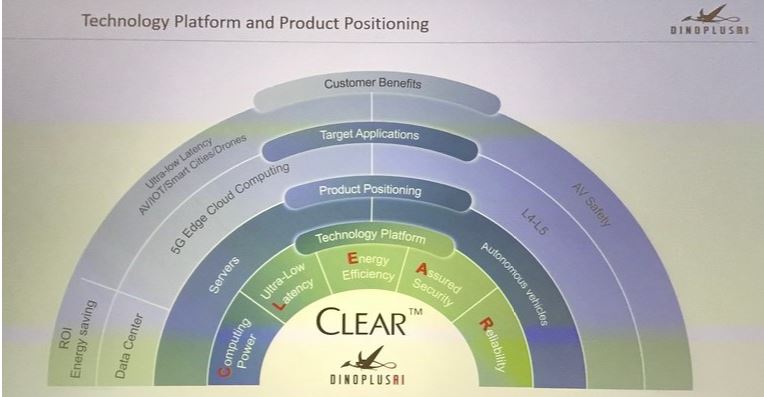

He emphasized that 5G edge cloud computing and ADAS/autonomous driving require predictable, consistent ultra-low latency (~0.2 ms for ResNet-50), high performance, and high-reliability, high-precision inference. The CLEAR diagram summarizes the technology platform and its positioning.

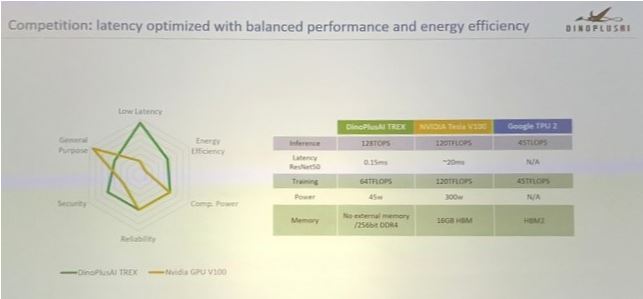

DinoplusAI also presented a latency comparison with the NVIDIA Tesla V100 and Google TPU 2.

___

[1] Software resources: Fedora, Debian, OpenEmbedded/Yocto, SiFive blog

Part II

(Part I can be found here)